BUSINESS & EDUCATION

JUDGEMENT DAY

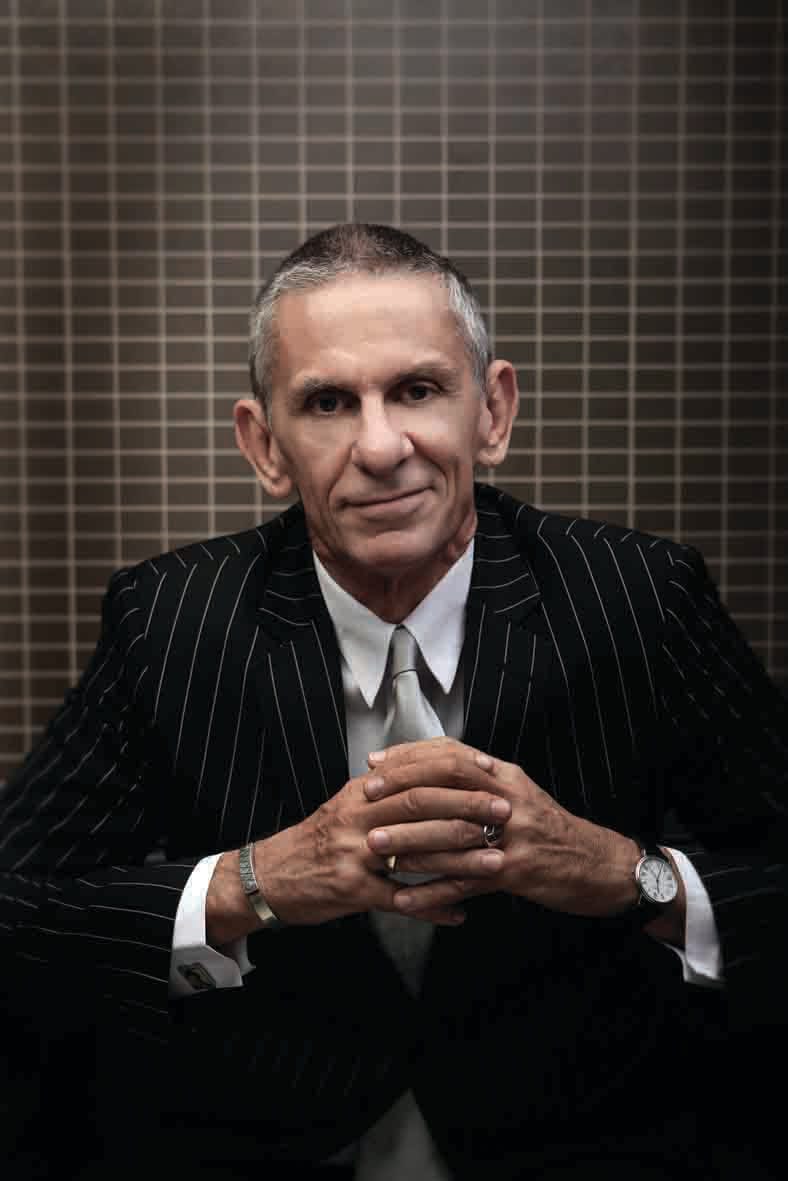

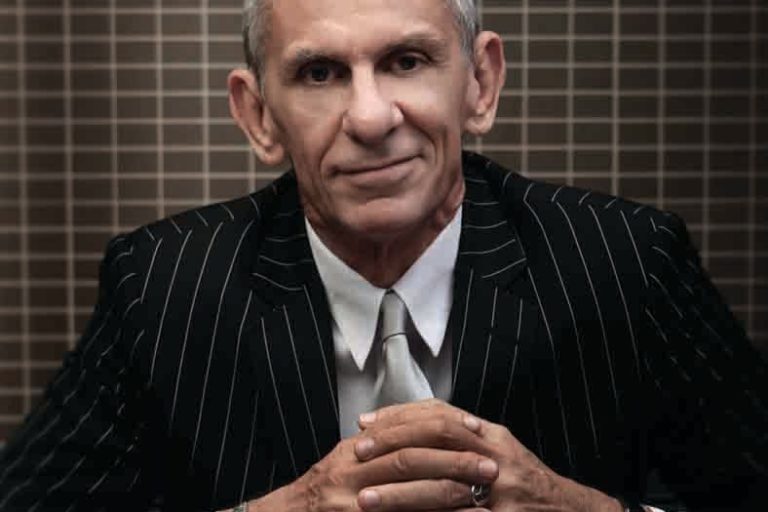

WORDS: PHOTOGRAPHY

Where does technological development end and a nightmare begin?

The other night I had the craziest dream.

Remember Haley Joel Osment? He’s that cute-but-oh-so-creepy little weird kid with the Sad Sack face who kept seeing dead people in M. Night Shyamalan’s 1999 supernatural horror movie The Sixth Sense. If you were as spooked as I definitely was by that things-that-go-bump-in-the-night ghost story, you won’t have forgotten this kid in a hurry. But then, as if The Sixth Sense wasn’t quite spooky enough, in 2001 he backed it up with a dark and contemplative tale called A.I. Artificial Intelligence, which was even more disturbing. This time he played – surprise, surprise – another cute, creepy little weird kid, only with a slight and distinctly unsettling difference. He’s a robot.

Based on the 1969 short story Super-Toys Last All Summer Long by Brian Aldiss, the screenplay, written in 1995 by Hollywood heavyweight Steven Spielberg, is set in the late 22nd century, by which time an advanced human society has developed a sophisticated new range of robots called Mecha, capable of human-like thoughts and emotions. When Martin, the only child of affluent couple Henry and Monica Swinton, falls desperately ill and is placed in suspended animation pending a cure, his mother Monica falls into a state of deep mourning. To help her cope, Henry arranges a replacement – David, a cute, creepy, weird little mini-Mecha that looks exactly like Haley Joel Osment and is programmed to display unquestioning love for its owners. How scary is that?

Naturally, Monica can’t help but tinker with David’s controls, and eventually she activates his imprinting protocol to ensure he will love her forever. It’s a nice thought, and one most of us parents would like to try on our kids, but in this case it backfires badly when, as fate would have it, Martin recovers, and poor little David becomes a third wheel. So he spends the rest of the movie searching for the Blue Fairy, the fictional character from Carlo Collodi’s 1883 book The Adventures of Pinocchio, convinced she can wave her magic wand and turn him into a real boy, just like she did for Pinocchio, so Monica will finally love him and take him home with her.

Yeah, I know. Pretty creepy, right?

So anyway, the other night I woke up wide-eyed in a cold and shivering sweat, haunted by visions of Haley Joel Android, decked out in a judge’s wig and fancy judicial robes, staring down from a high courtroom bench with that weird, cutesy, crazy, half-startled stare of his, babbling binary gibberish in my general direction.

What brought on this nightmare? I had recently been reading about the “Artificial Intelligence Judge” developed by computer scientists at University College London: software capable of weighing up evidence, arguments, and thorny questions of right and wrong to accurately predict the verdicts in “four out of five” cases before the European Court of Human Rights. The scientists’ algorithm examined English-language data sets for 584 cases, analysed the information so gleaned, and came to its very own verdict. Its results matched the court’s actual decisions 79% of the time. That impressive scorecard had some scientists heralding a breakthrough in the use of AI in the justice system, but a lot of lawyers were left asking the obvious question – what becomes of the poor slob whose case happens to fall in the 21% the computer got wrong?

But it’s an ever-changing world, and there’s no stopping progress. Just last year, the Wisconsin Supreme Court considered an appeal on the permissible use of data analysis in sentencing criminal defendants. In State v Loomis, the defendant had been charged in connection with a drive-by shooting. In deciding his sentence, the judge used an analytics tool called Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) to assess whether he was likely to reoffend. By comparing his profile with publicly available data, and thereby building predictive models based on historical correlations, COMPAS assessed the defendant as being at high risk of reoffending. Naturally, he objected indignantly, claiming COMPAS had violated his constitutional right to due process. But the court disagreed, ruling that judicial use of COMPAS was acceptable, provided full disclosure was made.

Interestingly, the University College London study found that one of the most reliable factors in its Artificial Intelligence Judge’s predictions of court decisions was the language used in the case texts examined. Being computer illiterate, I guess I was moderately comforted to hear that, until I read the other news flooding fresh out of Facebook. According to recent reports, researchers at the social media heavyweight discovered that its AI system was no longer speaking in English. It turns out the computers had decided our language was way too cumbersome, so they invented a more efficient alternative in which to communicate with each other. The fact that no one but the computers understood what the heck they were saying apparently left them unfazed, but it certainly concerned their human creators. Tech visionaries and scientists like Elon Musk and Stephen Hawking have been warning for years that advanced artificial intelligence could pose a serious threat to humanity, so Facebook wasn’t about to take chances. The system was shut down immediately.

But then, there’s no stopping progress. So, as if real-life judges weren’t artificially intelligent enough, it seems one day we may get a new one-size-fits-all, plastic-and-silicon model that talks in a language we don’t understand and is programmed for 79% judicial perfection. Now that’s what I call a nightmare.